OpenStack++ Infrastructure for Cloudlet Management on the Edge

This post is an extract of the article “A Migration-enhanced Edge Computing Support for Mobile Services in Hostile Environments”, presented at the 13th International Wireless Communications and Mobile Computing Conference (IWCMC), 2017.

The advancement and spread of increasingly sophisticated smart devices, capable of several forms of wireless connectivity, have led to a pervasive presence of mobile devices in everyday life. These devices are used in a wide range of contexts, from personal use to Smart City scenarios, where applications engage users to solve tasks distributed throughout the environment.

Mobile devices will continue to spread and consolidate their position if infrastructure and applications can benefit from standard solutions and virtualization, such as cloud computing, which allows anyone to rig a virtual cluster with large-scale computational resources instead of buying an expensive dedicated server. At the same time, to develop adaptive and autonomous systems, and an infrastructure able to support pervasive use of mobile devices even under heavy workloads, a two-layer model based only on the cloud and mobile devices may be too simplistic and inefficient.

Cloud computing was not designed to continuously collect and process large amounts of highly dynamic and heterogeneous data on the fly. Letting devices communicate directly and independently with the cloud may lead to several issues:

- redundancy of requests and information traffic

- extremely high energy consumption

- high latency and lack of location awareness due to the distance between the cloud and mobile devices, plus concerns about mobility, security, and privacy

- many applications that operate in hostile environments execute computation-intensive tasks under strict requirements

Hostile environments are characterized by very high uncertainty about available resources, such as computational capability, by limited or inconsistent bandwidth and unreliable networks, and by the need for rapid deployment.

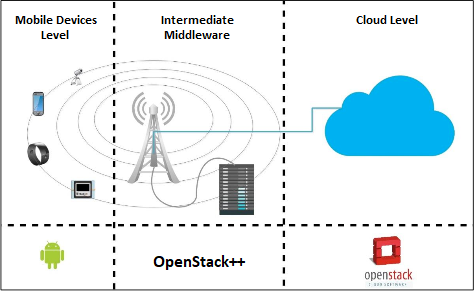

In this scenario, an additional intermediate layer between mobile devices and the cloud is required to support the devices' requirements and complement the cloud close to the devices. A Mobile Edge Computing (MEC) layer brings computation resources near the edge of the network to meet the requirements of mobile devices and overcome many cloud limitations, e.g. latency, mobility support, location awareness, and scalability. It also allows the system to work properly in hostile environments, coping with typical issues such as device recovery, continuity of service, low bandwidth, and unexpected scenarios.

OpenStack++ is a platform used as an intermediate MEC middleware that overcomes the challenges of resource-limited mobile devices in hostile environments, helping them preserve their functionality and keep supplying the service to end users even under high mobility. OpenStack started in 2010 as a joint project of Rackspace Hosting and NASA and is currently managed by the OpenInfra Foundation. It is a well-known and widely adopted open-source IaaS platform made of many interacting services that together deliver its full feature set, managing computation, storage, and networking resources to provide dynamic allocation of VMs.

OpenStack++ is an extension of the OpenStack infrastructure with very similar functionality, specifically targeted at running the intermediate middleware layer and supporting resource-limited devices: it moves computational resources to the edge of the network by provisioning and orchestrating VMs near mobile devices. Features can be dynamically added to or removed from the platform in the same way extensions are added to a default OpenStack installation, by placing custom files (e.g. cloudlet.py, cloudlet_api.py) into standard paths.
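As a rough illustration of this drop-in mechanism, the sketch below scans an extension directory, imports every Python file it finds there, and lets each file register its features. The `register(registry)` convention and the function names are hypothetical, chosen only for this sketch; they are not OpenStack's actual extension-loading API.

```python
import importlib.util
import pathlib
from typing import Callable, Dict

# Feature name -> callable contributed by an extension module (hypothetical).
Registry = Dict[str, Callable[..., object]]


def load_extensions(ext_dir: str) -> Registry:
    """Import every *.py file in ext_dir and let it register its features.

    Hypothetical convention: an extension module exposes register(registry)
    and adds its entry points to the dict it receives.
    """
    registry: Registry = {}
    for path in sorted(pathlib.Path(ext_dir).glob("*.py")):
        spec = importlib.util.spec_from_file_location(path.stem, path)
        if spec is None or spec.loader is None:
            continue  # not importable as a module; skip it
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)  # runs the extension's top-level code
        register = getattr(module, "register", None)
        if callable(register):
            register(registry)
    return registry
```

Deleting a file from the directory and reloading drops the corresponding feature, which mirrors the add/remove behaviour described above.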

In OpenStack++ it is possible to:

- import a base VM into the Glance image store

- resume a base VM to customize it and create a new overlay for it

- create a VM overlay (the delta between the VM instance and the VM base image) from a running VM instance

- perform VM synthesis, provisioning a VM instance on an OpenStack++ cluster from a VM overlay

- perform VM handoff, migrating a VM instance to a different OpenStack++ cluster
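The overlay idea can be illustrated with a simplified, block-level delta: only the blocks of the customized instance that differ from the base image are kept and compressed, and synthesis writes them back onto the base image. This is a sketch under stated assumptions (fixed 4 KiB blocks, LZMA compression, a made-up binary layout), not the overlay format OpenStack++ actually uses.

```python
import lzma
import struct
from typing import List

BLOCK_SIZE = 4096  # illustrative block size, not an OpenStack++ setting


def split_blocks(image: bytes) -> List[bytes]:
    return [image[i:i + BLOCK_SIZE] for i in range(0, len(image), BLOCK_SIZE)]


def create_overlay(base: bytes, instance: bytes) -> bytes:
    """Keep only the instance blocks that differ from the base, then compress."""
    base_blocks = split_blocks(base)
    parts = [struct.pack(">Q", len(instance))]  # header: final image length
    for idx, block in enumerate(split_blocks(instance)):
        if idx >= len(base_blocks) or block != base_blocks[idx]:
            # record: block index, block length, block bytes
            parts.append(struct.pack(">QI", idx, len(block)) + block)
    return lzma.compress(b"".join(parts))


def synthesize(base: bytes, overlay: bytes) -> bytes:
    """Rebuild the customized instance from the base image and an overlay."""
    raw = lzma.decompress(overlay)
    (total_len,) = struct.unpack_from(">Q", raw, 0)
    # start from the base, truncated or zero-padded to the instance length
    image = bytearray(base[:total_len].ljust(total_len, b"\0"))
    offset = 8
    while offset < len(raw):
        idx, size = struct.unpack_from(">QI", raw, offset)
        offset += 12
        start = idx * BLOCK_SIZE
        image[start:start + size] = raw[offset:offset + size]
        offset += size
    return bytes(image)
```

Because unchanged blocks are never stored, the overlay stays small when a customization touches only a few regions of a large base image.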

The mobile device layer is composed of endpoints that need to run complex, highly resource-demanding workloads, also in hostile environments, but lack the capabilities to do so. Heavy image and video analysis and processing can only be performed with computationally intensive techniques that require resources beyond the capacity of mobile devices. An example is a face recognition application for security purposes, which monitors an environment with cameras that capture photos and videos of the surrounding area. For such an application to be useful in real-world adoption, the processing time for each media item must be limited to a few seconds, so that an alarm can be raised if something suspicious happens.

In such a context, mobile devices lack the capabilities to satisfy these strict requirements, particularly in the hostile environments where they may operate. They must therefore delegate the analysis tasks to the MEC middleware layer.
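The delegation argument can be made concrete with a simple offloading model: local execution time is the required CPU work divided by the device speed, while edge execution adds a network round trip and the upload time of the media. The model, its parameters, and the numbers below are illustrative assumptions, not measurements from the article.

```python
from dataclasses import dataclass


@dataclass
class Task:
    input_mb: float      # size of the photo or video to analyse, in megabytes
    work_gcycles: float  # CPU work required, in billions of cycles


def local_time(task: Task, device_ghz: float) -> float:
    """Seconds to run the task on the device itself."""
    return task.work_gcycles / device_ghz


def edge_time(task: Task, uplink_mbps: float, edge_ghz: float, rtt_s: float) -> float:
    """Seconds to upload the media and run the task on a MEC node's VM."""
    upload_s = task.input_mb * 8 / uplink_mbps  # megabytes -> megabits
    return rtt_s + upload_s + task.work_gcycles / edge_ghz


def choose_executor(task: Task, deadline_s: float, device_ghz: float,
                    uplink_mbps: float, edge_ghz: float, rtt_s: float) -> str:
    """Pick the faster option that meets the deadline, or 'none' if neither does."""
    options = [
        (local_time(task, device_ghz), "device"),
        (edge_time(task, uplink_mbps, edge_ghz, rtt_s), "edge"),
    ]
    feasible = [opt for opt in options if opt[0] <= deadline_s]
    return min(feasible)[1] if feasible else "none"
```

With a hypothetical 2 MB photo requiring 40 billion cycles, a 1.5 GHz device needs about 27 s, whereas an edge VM with 10 GHz of aggregate capacity, reached over a 20 Mbit/s uplink with a 50 ms round trip, needs about 4.85 s, so only the edge meets a 5 s deadline.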

The cloud layer assists the MEC intermediate middleware in delivering its services, acting as a backup and global repository of components, i.e. all the base VM images used for node setup and all the service-specific overlays created by MEC nodes. Moreover, the cloud is responsible for reacting to anomalies or errors and recovering the affected MEC nodes, minimizing their downtime through a web server that a MEC node can query any time it needs a file or document.
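A minimal sketch of the cloud-side repository, assuming it is a plain static HTTP server that exposes base images and overlays as files. The article does not specify the protocol or layout, so the directory structure, file names, and helper names here are hypothetical; only Python's standard `http.server` and `urllib` modules are used.

```python
import functools
import http.server
import threading
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional


def start_repository(directory: str) -> http.server.ThreadingHTTPServer:
    """Serve `directory` over HTTP on an ephemeral localhost port.

    A real deployment would bind a public interface and fixed port instead.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_from_cloud(base_url: str, name: str, timeout: float = 10.0) -> Optional[bytes]:
    """MEC node side: download a file, or return None if the cloud lacks it."""
    url = f"{base_url}/{urllib.parse.quote(name)}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read()
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise  # other HTTP errors are real failures
```

Returning `None` for a missing file lets a recovering MEC node try another source instead of failing outright.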

Mobile devices can automatically discover the MEC nodes within their subnetwork: each node advertises the services it runs through multicast messages carrying the type and description of each middleware service. Once a mobile device contacts the middleware to execute the tasks it needs, the middleware forwards to OpenStack++ a request to create a VM that executes the task, then returns to the mobile device a reference to that VM for subsequent direct communication between the VM and the device.
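The advertisement exchange might look like the following sketch, in which each MEC node multicasts a JSON message listing the type and description of its services. The multicast group, port, and JSON layout are hypothetical choices for illustration; the article does not specify the message format.

```python
import json
import socket
from typing import Dict

MCAST_GROUP = "239.255.42.99"  # hypothetical administratively scoped group
MCAST_PORT = 50042             # hypothetical port


def make_advertisement(node_ip: str, services: Dict[str, str]) -> bytes:
    """Encode a node's services (type -> description) as a JSON datagram."""
    msg = {
        "node": node_ip,
        "services": [{"type": t, "description": d} for t, d in sorted(services.items())],
    }
    return json.dumps(msg).encode("utf-8")


def parse_advertisement(payload: bytes) -> Dict[str, object]:
    """Decode a datagram back into the node address and its service map."""
    msg = json.loads(payload.decode("utf-8"))
    services = {s["type"]: s["description"] for s in msg["services"]}
    return {"node": msg["node"], "services": services}


def announce(payload: bytes, ttl: int = 1) -> None:
    """MEC node side: multicast one advertisement (TTL 1 keeps it in the subnet)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP) as sock:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
        sock.sendto(payload, (MCAST_GROUP, MCAST_PORT))


def listen_once(timeout: float = 5.0) -> bytes:
    """Mobile device side: join the group and wait for one advertisement."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind(("", MCAST_PORT))
        # struct ip_mreq: group address followed by the local interface (any)
        mreq = socket.inet_aton(MCAST_GROUP) + socket.inet_aton("0.0.0.0")
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, mreq)
        sock.settimeout(timeout)
        payload, _sender = sock.recvfrom(65535)
        return payload
```

A MEC node would call `announce(make_advertisement(...))` periodically, while a mobile device calls `listen_once()` and `parse_advertisement()` to learn which node offers the service it needs.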

The time a VM needs to start serving mobile device requests varies depending on which configuration and files are already available on the MEC node, in the OpenStack++ image repository:

- OpenStack++ has the base VM and retrieves the right overlay locally at runtime, so it can start serving mobile devices as soon as the overlay has been applied to the existing base VM

- OpenStack++ has the base VM but lacks a local overlay for the service, so it must retrieve the overlay from remote nodes or from the cloud, which increases the time needed to set up the node and be ready to serve device requests

- OpenStack++ lacks both the base VM and the overlay, and needs a long time to retrieve the required resources and configure itself at runtime, because base images are usually large
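The three cases can be sketched as a lookup that checks the local store first, then peer MEC nodes, then the cloud, and reports which case applied. The store, the fetcher callables, and the `<service>.overlay` naming scheme are hypothetical; real transfers would go over the network.

```python
from enum import Enum
from typing import Callable, Dict, Iterable, Optional

# A fetcher returns the artifact bytes, or None if that source lacks it.
Fetcher = Callable[[str], Optional[bytes]]


class Case(Enum):
    LOCAL_OVERLAY = "base VM and overlay both local: fastest"
    REMOTE_OVERLAY = "base VM local, overlay fetched remotely"
    NEEDS_BASE_IMAGE = "base VM (large) must be fetched: slowest"


def fetch_remote(name: str, peers: Iterable[Fetcher], cloud: Fetcher) -> bytes:
    """Try peer MEC nodes first, then fall back to the cloud repository."""
    for fetch in [*peers, cloud]:
        data = fetch(name)
        if data is not None:
            return data
    raise LookupError(f"{name} not available on any peer or in the cloud")


def prepare_service(service: str, base: str, store: Dict[str, bytes],
                    peers: Iterable[Fetcher], cloud: Fetcher) -> Case:
    """Make base image and overlay available locally; report which case applied."""
    overlay = f"{service}.overlay"  # hypothetical naming scheme
    had_base, had_overlay = base in store, overlay in store
    peers = list(peers)  # may be iterated twice below
    if not had_base:
        store[base] = fetch_remote(base, peers, cloud)
    if not had_overlay:
        store[overlay] = fetch_remote(overlay, peers, cloud)
    if not had_base:
        return Case.NEEDS_BASE_IMAGE
    return Case.LOCAL_OVERLAY if had_overlay else Case.REMOTE_OVERLAY
```

Fetched artifacts are cached in the local store, so a second request for the same service on the same node falls into the fastest case.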

When a mobile device moves near a new MEC node, so that synthesis and handoff between two MEC instances are needed, the device, which keeps the IP addresses of all the MEC nodes it has used in the past, sends a request to the new MEC node to start the migration, specifying the reference of the node that holds its current state.
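On the device side, the handoff bookkeeping could be as simple as the sketch below: the device keeps the IP addresses of the MEC nodes it has used, most recent last, and builds a request for the new node naming the node that holds its current state. The class, field, and message names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MobileDevice:
    device_id: str
    # IP addresses of MEC nodes used in the past, most recently used last.
    visited_nodes: List[str] = field(default_factory=list)

    def attach(self, node_ip: str) -> None:
        """Record that the device is now served by node_ip."""
        if node_ip in self.visited_nodes:
            self.visited_nodes.remove(node_ip)
        self.visited_nodes.append(node_ip)

    def handoff_request(self, new_node_ip: str, service: str) -> Dict[str, str]:
        """Build the message sent to the new node to start the migration."""
        if not self.visited_nodes:
            raise RuntimeError("no previous MEC node holds this device's state")
        return {
            "type": "handoff",
            "device": self.device_id,
            "service": service,
            "target": new_node_ip,
            "state_source": self.visited_nodes[-1],  # node holding the current state
        }
```

After the new node confirms the migration, the device calls `attach()` with the new address so that the next handoff points at the right state source.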

During handoff, resource usage and middleware latency are optimized by preloading the base VM images on the MEC nodes. In this way, migrating a service minimizes both the time and the network traffic generated, because only the overlay, containing all the files of the target service, must be transferred and applied to the base image to create a new VM that reproduces the service used previously.
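The benefit of preloading can be expressed as a back-of-the-envelope estimate. Let $S_{\text{base}}$ and $S_{\text{overlay}}$ be the sizes of the base image and the overlay, $B$ the available bandwidth, and $T_{\text{synth}}$ the time to apply the overlay; these symbols and the numbers below are illustrative assumptions, not figures from the article.

$$
T_{\text{preloaded}} \approx \frac{S_{\text{overlay}}}{B} + T_{\text{synth}},
\qquad
T_{\text{cold}} \approx \frac{S_{\text{base}} + S_{\text{overlay}}}{B} + T_{\text{synth}}
$$

For instance, with a hypothetical $S_{\text{base}} = 8000$ MB, $S_{\text{overlay}} = 200$ MB and $B = 10$ MB/s, the transfer term is 20 s with a preloaded base image versus 820 s without it.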

For application execution, the MEC nodes are equipped with OpenCV (Open Source Computer Vision Library), an open-source library that includes a set of algorithms for processing media files. A VM receives an image from a mobile device and uses OpenCV to detect the human faces in it, highlighting each one with a red border.
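A minimal sketch of the VM-side request handling, with the detector injected as a function so that the flow (detect, draw red borders, store the annotated copy, return the count) can be shown without external dependencies. In the described system that role is played by OpenCV, whose `CascadeClassifier.detectMultiScale` returns face boxes as `(x, y, w, h)`; note that OpenCV orders colour channels as BGR, so red is `(0, 0, 255)` there, while this sketch uses RGB. The image representation and function names are hypothetical.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]           # (R, G, B)
Image = List[List[Pixel]]              # rows of pixels
Box = Tuple[int, int, int, int]        # (x, y, width, height)
Detector = Callable[[Image], List[Box]]
RED: Pixel = (255, 0, 0)


def draw_box(img: Image, box: Box, color: Pixel = RED) -> None:
    """Paint the 1-pixel border of `box` in place, clipped to the image."""
    x, y, w, h = box
    height, width = len(img), len(img[0])
    for cx in range(max(x, 0), min(x + w, width)):
        for cy in (y, y + h - 1):          # top and bottom edges
            if 0 <= cy < height:
                img[cy][cx] = color
    for cy in range(max(y, 0), min(y + h, height)):
        for cx in (x, x + w - 1):          # left and right edges
            if 0 <= cx < width:
                img[cy][cx] = color


def handle_image(img: Image, detect: Detector, store: Callable[[Image], None]) -> int:
    """Detect faces, store an annotated copy, return the number of faces."""
    boxes = detect(img)
    annotated = [row[:] for row in img]   # leave the caller's image untouched
    for box in boxes:
        draw_box(annotated, box)
    store(annotated)                      # e.g. save the copy on the MEC node's disk
    return len(boxes)
```

Only the integer count travels back to the mobile client, which keeps the response small even when the annotated image is large.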

OpenCV already includes an implementation of a face detection classifier that combines the outputs of several weak classifiers to produce its decision: training builds it from many weak classifiers, each performing a simple check. The resulting classifier is applied to every region of interest of the target image to check whether any location is likely to show the searched object, such as a face. Finally, the server stores a local copy of the image with a red box around each face, and the number of detected faces is returned to the interested mobile clients.
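The cascade idea can be sketched in plain Python: an integral image makes any rectangle sum a constant-time lookup, each weak classifier thresholds a two-rectangle (Haar-like) feature, each stage sums the weighted votes of its weak classifiers, and a window is reported only if it passes every stage, so most windows are rejected early. This is a toy version of the Viola-Jones scheme under simplifying assumptions (one fixed window size, hand-set thresholds instead of trained ones); OpenCV's trained cascades also scan the image at multiple scales.

```python
from dataclasses import dataclass
from typing import List, Tuple

Gray = List[List[int]]           # grayscale image, rows of intensities
Box = Tuple[int, int, int, int]  # (x, y, width, height)


def integral_image(img: Gray) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (one row/column of padding)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of a w x h rectangle in constant time using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


@dataclass
class WeakClassifier:
    """Decision stump on a two-rectangle feature: top half minus bottom half."""
    x: int
    y: int
    w: int
    h: int            # even, so the feature splits into two equal halves
    threshold: float
    polarity: int     # +1 or -1, flips the direction of the comparison
    alpha: float      # weight of this classifier's vote

    def vote(self, ii: List[List[int]], ox: int, oy: int) -> float:
        half = self.h // 2
        top = rect_sum(ii, ox + self.x, oy + self.y, self.w, half)
        bottom = rect_sum(ii, ox + self.x, oy + self.y + half, self.w, half)
        feature = top - bottom
        return self.alpha if self.polarity * feature < self.polarity * self.threshold else 0.0


@dataclass
class Stage:
    classifiers: List[WeakClassifier]
    stage_threshold: float

    def passes(self, ii: List[List[int]], ox: int, oy: int) -> bool:
        return sum(c.vote(ii, ox, oy) for c in self.classifiers) >= self.stage_threshold


def detect(img: Gray, stages: List[Stage], win: int, step: int = 1) -> List[Box]:
    """Slide a win x win window over the image; keep windows accepted by every stage."""
    ii = integral_image(img)
    hits: List[Box] = []
    for oy in range(0, len(img) - win + 1, step):
        for ox in range(0, len(img[0]) - win + 1, step):
            # all() stops at the first stage that rejects, as in a cascade
            if all(stage.passes(ii, ox, oy) for stage in stages):
                hits.append((ox, oy, win, win))
    return hits
```

Putting cheap, permissive stages first is what makes the cascade fast: the bulk of background windows fail an early stage and never reach the more expensive ones.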